【RL】强化学习小例子 Q table 表单 Q learning 算法

看了两天书本《深入浅出强化学习》,感觉对概念理解的还是太笼统。鉴于做中学的思想,准备找个小例子跑一跑程序,加深下理解。

找了很多,要么太难,要么太笼统。只找到了莫烦Python老师的这个小例子,不仅有代码,还有视频讲解,感觉很多,跟着大佬学一学。

0. 任务描述

使用的任务是一个1维世界,在世界的右边有宝藏,智能体只要得到宝藏尝到了甜头,以后就记住了得到宝藏的方法,这就是智能体用强化学习所学习到的行为。

-o---T # T is the position of treasure, o is the position of agent

Q - learning 是一种记录行为值(Q value)的方法,每种在一定状态的行为都会有一个值 Q ( s , a ) Q(s,a) Q(s,a),就是说行为 a a a 在状态 s s s 状态的值是 Q ( s , a ) Q(s,a) Q(s,a)。

而在探索宝藏的任务中,状态 s s s 就是 agent 的位置。而在每一个位置都能做出两个行为 a = left/right a = \text{left/right} a=left/right。

关于行为选择的判定标准:如果在某个地点 s 1 s_1 s1,智能体在计算了他能有的两个行为 a = left/right a = \text{left/right} a=left/right 后,结果是 Q ( s 1 , a 1 ) > Q ( s 1 , a 2 ) Q(s_1,a_1) > Q(s_1, a_2) Q(s1,a1)>Q(s1,a2),那么智能体就会选择 a 1 = left a_1 = \text{left} a1=left 这个行为。

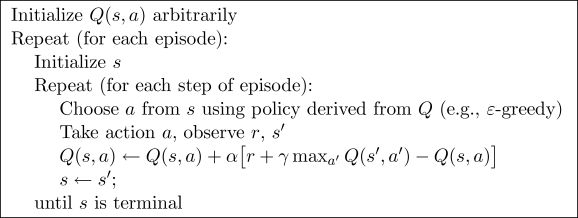

1. 伪代码

先把要实现的伪代码放出来:

简单翻译过来就是:

- 首先初始化 Q ( s , a ) Q(s,a) Q(s,a) 表

- 重复

2.1 初始化状态 s s s

2.2 重复

\quad 2.2.1 从 Q Q Q 表中根据状态 s s s 选择行为动作 a a a

\quad 2.2.2 根据行为动作 a a a,产生奖励 r r r 和下一步状态 s ′ s' s′

\quad 2.2.3 更新 Q Q Q 表 Q ( s , a ) Q(s,a) Q(s,a)

\quad 2.2.4 更新状态 s ′ s' s′

2.3 直到状态 s s s 达到结束状态

2. Python 代码实现

2.1 初始化一些参数

import numpy as np

import pandas as pd

import time

np.random.seed(2) # reproducible

N_STATES = 6 # the length of the 1 dimensional world

ACTIONS = ['left', 'right'] # actions of agent

EPSILON = 0.9 # greedy policy

ALPHA = 0.1 # learning rate

GAMMA = 0.9 # discount factor

MAX_EPISODES = 13 # maximum episodes

FRESH_TIME = 0.3 # fresh time for one move

2.2 新建一个空的 Q 表

def build_q_table(n_states, actions):

table = pd.DataFrame(

np.zeros((n_states, len(actions))), # q_table

columns=actions, # columns 对应的是行为名称

)

return table

上述代码的结果为

# print(build_q_table(N_STATES, ACTIONS))

# left right

# 0 0.0 0.0

# 1 0.0 0.0

# 2 0.0 0.0

# 3 0.0 0.0

# 4 0.0 0.0

# 5 0.0 0.0

2.3 行为选择

传入状态, Q Q Q 表

def choose_action(state, q_table):

# This is how to choose an action

state_actions = q_table.iloc[state, :]

if (np.random.uniform() > EPSILON) or ((state_actions == 0).all()): # act non-greedy or state-action have no value

action_name = np.random.choice(ACTIONS)

else: # act greedy

action_name = state_actions.idxmax() # replace argmax to idxmax as argmax means a different function in newer version of pandas

return action_name

2.4 环境反馈

def get_env_feedback(S, A):

# This is how agent will interact with the environment

if A == 'right': # move right

if S == N_STATES - 2: # terminate

S_ = 'terminal'

R = 1

else:

S_ = S + 1

R = 0

else: # move left

R = 0

if S == 0:

S_ = S # reach the wall

else:

S_ = S - 1

return S_, R

2.5 更新环境

def update_env(S, episode, step_counter):

# This is how environment be updated

env_list = ['-']*(N_STATES-1) + ['T'] # '---------T' our environment

if S == 'terminal':

interaction = 'Episode %s: total_steps = %s' % (episode+1, step_counter)

print('\r{}'.format(interaction), end='')

time.sleep(2)

print('\r ', end='')

else:

env_list[S] = 'o'

interaction = ''.join(env_list)

print('\r{}'.format(interaction), end='')

time.sleep(FRESH_TIME)

2.6 主循环

def rl():

# main part of RL loop

q_table = build_q_table(N_STATES, ACTIONS)

for episode in range(MAX_EPISODES):

step_counter = 0

S = 0

is_terminated = False

update_env(S, episode, step_counter)

while not is_terminated:

A = choose_action(S, q_table)

S_, R = get_env_feedback(S, A) # take action & get next state and reward

q_predict = q_table.loc[S, A]

if S_ != 'terminal':

q_target = R + GAMMA * q_table.iloc[S_, :].max() # next state is not terminal

else:

q_target = R # next state is terminal

is_terminated = True # terminate this episode

q_table.loc[S, A] += ALPHA * (q_target - q_predict) # update

S = S_ # move to next state

update_env(S, episode, step_counter+1)

step_counter += 1

return q_table

if __name__ == "__main__":

q_table = rl()

print('\r\nQ-table:\n')

print(q_table)